Deepfakes, AI‑generated media that mimic real people’s voices and likenesses, have moved from niche tech demos to major digital threats. The number of deepfake files shared online is projected to climb from roughly 500,000 in 2023 to about 8 million in 2025, a sixteenfold increase that reflects explosive growth in misuse. These synthetic videos and audio clips are now used in scams, political misinformation, and fraud schemes that affect corporations, elections, and everyday users. From banking fraud to fake celebrity endorsements, deepfakes are reshaping cybersecurity priorities across sectors. Explore the statistics below to see where this critical issue stands today.

Editor’s Choice

- Deepfake files online are projected to reach 8 million in 2025, up from 500,000 in 2023.

- Deepfake fraud attempts spiked 3,000% in 2023.

- Human detection of high‑quality deepfake videos is just ~24.5% accurate.

- 179 deepfake incidents were reported in Q1 2025, 19% more than in all of 2024.

- 49% of businesses globally encountered audio/video deepfake fraud by 2024.

- Deepfake detection market projected to reach $5.6 billion by 2034.

- FBI notes 4.2 million+ fraud reports since 2020, with deepfake scams increasing losses.

Recent Developments

- AI tools that produce hyper‑realistic video (e.g., fabricated riot and election footage) have made deepfakes easier to create and share.

- Italy’s privacy watchdog warned against AI‑generated non‑consensual imagery, citing rising misuse.

- Scotland and Wales are piloting detection software for elections to identify manipulated media.

- The FBI warns of AI‑generated virtual kidnapping scams using deepfake content to demand ransom.

- Legislation such as the U.S. TAKE IT DOWN Act aims to curb non‑consensual deepfakes.

- High‑profile deepfakes (e.g., of investment icon Warren Buffett) highlight fraud risks on social platforms.

- Research shows deepfakes now mimic faces, voices, and expressions with alarming realism.

- Authorities worldwide are coordinating to trace and prosecute deepfake distribution channels.

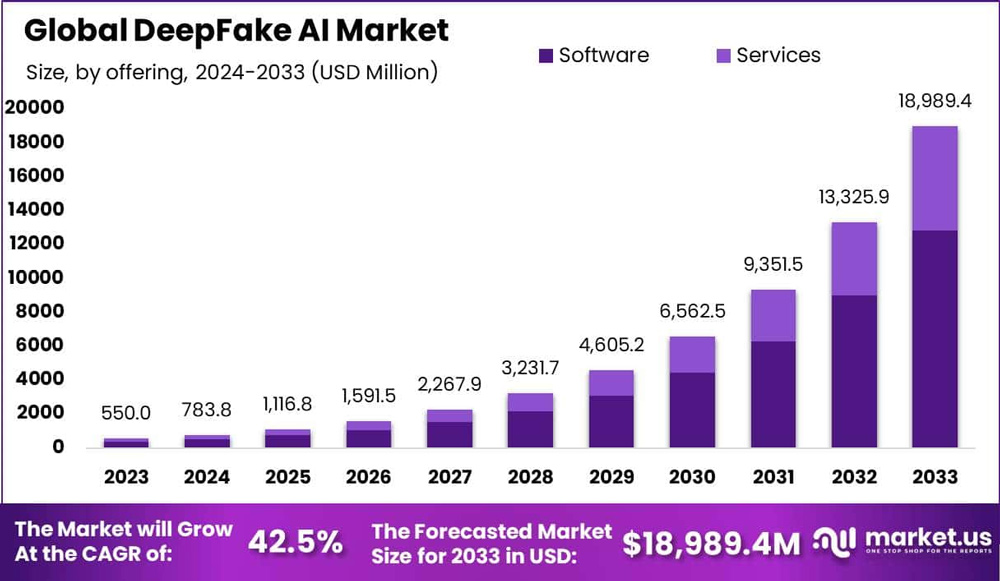

Global DeepFake AI Market Growth Overview

- The Global DeepFake AI market is projected to expand from $550.0 million in 2023 to $18,989.4 million by 2033, highlighting rapid industry-scale growth.

- The market is forecast to grow at a strong CAGR of 42.5%, positioning DeepFake AI among the fastest-growing AI segments globally.

- Market size increases steadily from $783.8 million in 2024 to $1,116.8 million in 2025, reflecting early-stage commercial adoption.

- By 2026, the market is projected to reach $1,591.5 million, signaling expanding enterprise and media use of DeepFake technologies.

- The market is projected to reach $2,267.9 million in 2027 and $3,231.7 million in 2028; the growth rate holds at 42.5% a year, but the dollar gains widen as the base grows.

- The industry reaches $4,605.2 million in 2029, indicating widespread integration across entertainment, marketing, and digital content creation.

- By 2030, the total market size is projected to climb to $6,562.5 million, driven by advances in AI-generated video and voice synthesis.

- Between 2030 and 2031, the market grows by about $2.8 billion to $9,351.5 million, the same 42.5% annual rate applied to a larger base.

- In 2032, market valuation jumps to $13,325.9 million, underscoring mainstream adoption and expanded commercial use cases.

- By 2033, the end of the forecast period, the Global DeepFake AI market reaches $18,989.4 million, supported by dominant software solutions and fast-growing services offerings.
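
The yearly figures above follow a single compounding rule. The sketch below is a minimal illustration that assumes the series is simply $550.0 million compounded at 42.5% per year from 2023 (an assumption, not a method stated by the forecaster); it compares that model with each published value and recovers the implied CAGR from the endpoints. `project` and `implied_cagr` are hypothetical helper names.

```python
# Sketch: check that the forecast follows a constant CAGR.
# Assumption (not stated by the source): value(year) = 550.0 * 1.425 ** (year - 2023).

BASE_YEAR = 2023
BASE_VALUE_M = 550.0  # 2023 market size, USD millions (from the text)
CAGR = 0.425          # 42.5% per year (from the text)

# Published forecast values, USD millions (from the list above)
PUBLISHED_M = {
    2023: 550.0, 2024: 783.8, 2025: 1116.8, 2026: 1591.5,
    2027: 2267.9, 2028: 3231.7, 2029: 4605.2, 2030: 6562.5,
    2031: 9351.5, 2032: 13325.9, 2033: 18989.4,
}


def project(year: int) -> float:
    """Market size in `year` if it compounds at CAGR from BASE_YEAR."""
    return BASE_VALUE_M * (1 + CAGR) ** (year - BASE_YEAR)


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant yearly rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


for year, published in PUBLISHED_M.items():
    print(f"{year}: published {published:>9,.1f}  model {project(year):>9,.2f}")

# Every year-over-year multiple in the published series is ~1.425 (42.5%).
print(f"2030->2031 multiple: {PUBLISHED_M[2031] / PUBLISHED_M[2030]:.3f}")
print(f"Implied CAGR 2023-2033: {implied_cagr(550.0, 18989.4, 10):.1%}")
```

Each modeled value lands within about 0.05 of the published figure, which suggests the forecast is a constant-rate curve: percentage growth is flat, while absolute growth widens every year.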

Deepfake Growth Statistics

- Total deepfake files shared online are expected to hit ~8 million by 2025.

- Deepfake fraud attempts jumped 3,000% in 2023.

- Synthetic voice fraud in insurance jumped 475% in 2024.

- Deepfake activity rose 680% year‑over‑year in 2024.

- Deepfake videos increased 550% from 2019 to 2024.

- Deepfakes now account for ~6.5% of all fraud attacks.

- 2,031 verified deepfake incidents were recorded in a single quarter of 2025.

- In 2024, a deepfake attack occurred roughly every five minutes worldwide.
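
Percentage increases, growth multiples, and yearly rates are easy to conflate. The following sketch converts the growth figures above into multiples and compound annual rates, assuming that "increased X%" means the new value is (1 + X/100) times the old one and that the five-minute attack interval was uniform across 2024. The helper names are hypothetical.

```python
import calendar


def pct_increase(old: float, new: float) -> float:
    """Percentage increase from old to new (500,000 -> 8,000,000 gives 1,500%)."""
    return (new - old) / old * 100


def multiple_from_pct(pct: float) -> float:
    """Convert a percentage increase into a growth multiple (550% -> 6.5x)."""
    return 1 + pct / 100


def annualized_rate(multiple: float, years: float) -> float:
    """Constant yearly growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1 / years) - 1


# Deepfake files online: ~500,000 (2023) -> ~8 million (2025, projected)
files_pct = pct_increase(500_000, 8_000_000)
files_yearly = annualized_rate(8_000_000 / 500_000, 2)  # 16x over 2 years

# Deepfake videos: +550% between 2019 and 2024 (5 years)
videos_yearly = annualized_rate(multiple_from_pct(550), 5)

# "One attack roughly every five minutes" in 2024 (a leap year)
minutes_2024 = (366 if calendar.isleap(2024) else 365) * 24 * 60
attacks_2024 = minutes_2024 // 5

print(f"Files: +{files_pct:,.0f}% total, ~{files_yearly:.0%} per year")
print(f"Videos: ~{videos_yearly:.1%} per year")
print(f"Attacks in 2024 at one per 5 minutes: ~{attacks_2024:,}")
```

A 1,500% rise over two years works out to 300% growth per year (4x annually), and a 550% rise over five years to roughly 45% per year.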

Incident Projections 2025–2030

- Deepfake calls are projected to increase 155% in 2025.

- Deepfake‑related fraud is expected to grow 162% in 2025.

- Contact center fraud exposure may reach $44.5 billion in 2025.

- By 2024, 53% of financial‑sector professionals reported encountering deepfake scam attempts.

- Projected deepfake fraud losses in the U.S. could hit $40 billion by 2027.

- Industry analyses suggest deepfake sophistication may outpace many detection systems by 2030.

- The continued spread of deepfake creation tools is likely to fuel more frequent attacks.

- Cross‑border deepfake incidents are complicating law enforcement response.

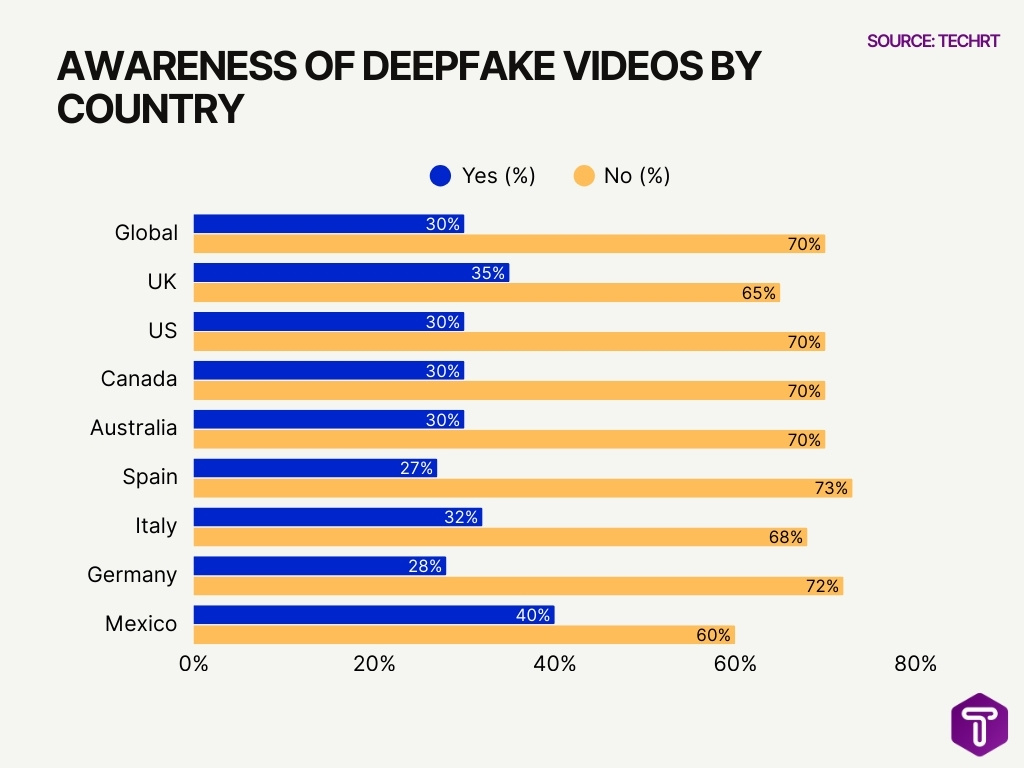

Global Awareness of Deepfake Videos by Country

- Global awareness remains low, with only 30% of people saying they know what a deepfake video is, while 70% report no awareness.

- The UK shows slightly higher awareness, where 35% of respondents recognize deepfake videos, compared to 65% who do not.

- In the United States, awareness mirrors the global average, with just 30% answering yes and 70% saying no.

- Canada and Australia follow the same pattern, each recording only 30% awareness and 70% lack of familiarity with deepfake videos.

- Spain reports the lowest awareness level in the dataset, with only 27% aware of deepfake videos and 73% unaware.

- Italy sits marginally above the global average: 32% of respondents understand what a deepfake video is, while 68% do not.

- In Germany, awareness remains limited, with just 28% saying yes versus 72% responding no.

- Mexico stands out, showing the highest awareness rate in the dataset at 40%, although a 60% majority still lack understanding.

- Across all surveyed countries, at least 60% of respondents are unfamiliar with deepfake videos, highlighting a significant global awareness gap.

- The data suggest a growing education and media-literacy challenge, as deepfake technology appears to be advancing faster than public understanding.

Financial Loss Figures

- More than 4.2 million fraud reports have been filed with the FBI since 2020.

- These reports account for over $50.5 billion in losses.

- Consumers lost $27.2 billion to identity fraud in 2024.

- Deloitte and industry sources project U.S. deepfake fraud losses at $40 billion by 2027.

- Individual victims of bank account scams lose about $20,000 on average.

- Single incidents can be costly: in one case, a deepfake video call impersonating a company’s CFO led to a $25 million payout.

- Deepfake fraud represents a growing slice of global financial crime.

- Deepfake attacks in some sectors drive costs that far exceed earlier projections.
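
The two FBI totals above can be combined into a rough average loss per report. This sketch assumes both totals describe the same set of reports and treats the "more than" and "over" figures as exact, so the result is approximate; it also covers all reported fraud, not deepfake fraud specifically.

```python
# Rough average loss per fraud report from the two FBI totals above.
# Assumption: both totals cover the same reports; lower bounds used as exact values.
TOTAL_REPORTS = 4_200_000          # fraud reports since 2020
TOTAL_LOSSES_USD = 50_500_000_000  # losses tied to those reports

avg_loss_per_report = TOTAL_LOSSES_USD / TOTAL_REPORTS
print(f"Average loss per report: ~${avg_loss_per_report:,.0f}")

# Comparison with the ~$20,000 average cited for bank account scams
BANK_SCAM_AVG_USD = 20_000
ratio = BANK_SCAM_AVG_USD / avg_loss_per_report
print(f"Bank account scam average is ~{ratio:.1f}x the overall per-report average")
```

The overall average comes to roughly $12,000 per report, so the ~$20,000 bank account scam figure sits well above the typical reported loss.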

Detection Accuracy Rates

- Just 0.1% of participants in one controlled test correctly identified every real and fake item shown, even when primed to look for deepfakes.

- In real‑world settings, human detection of deepfake videos is generally below 30% accuracy, meaning most users can’t reliably spot manipulated content.

- Academic benchmarks like Deepfake‑Eval‑2024 show that many detection models’ performance drops by 45–50% on real‑world deepfakes versus lab tests.

- A large survey found that traditional detection tools saw significant accuracy declines when deepfakes were generated with newer AI models.

- Security AI systems often outperform humans but still miss subtle manipulations, especially when audio and video manipulations are combined.

- The Deepfake Detection Challenge’s best model reached around 65% accuracy on a 4,000‑video holdout set, showing room for improvement.

- Detection drops further when deepfakes use multi‑modal cues (face, voice, expression) concurrently.

- Users overestimate their ability to spot fakes; many believe they can detect deepfakes but fail objective tests.

Voice Deepfake Trends

- Voice cloning is now the most common deepfake attack vector, driven by low cost and automation.

- Voice deepfakes can be created from as little as ~20–30 seconds of training audio, enabling rapid impersonation.

- Voice phishing (vishing) scams surged 442% in late 2024, fueled by more convincing synthesized voices.

- AI audio manipulations contribute significantly to credential theft and account takeovers.

- Companies report deepfake voice incidents spanning sectors such as banking, telecom, and health.

- Many voice deepfakes bypass legacy fraud detection systems because they mimic vocal nuances.

- North America saw a 1,740% surge in deepfake fraud cases, with voice cloning central to that increase.

- Email and SMS messages are increasingly paired with voice deepfakes in multi‑channel phishing campaigns.

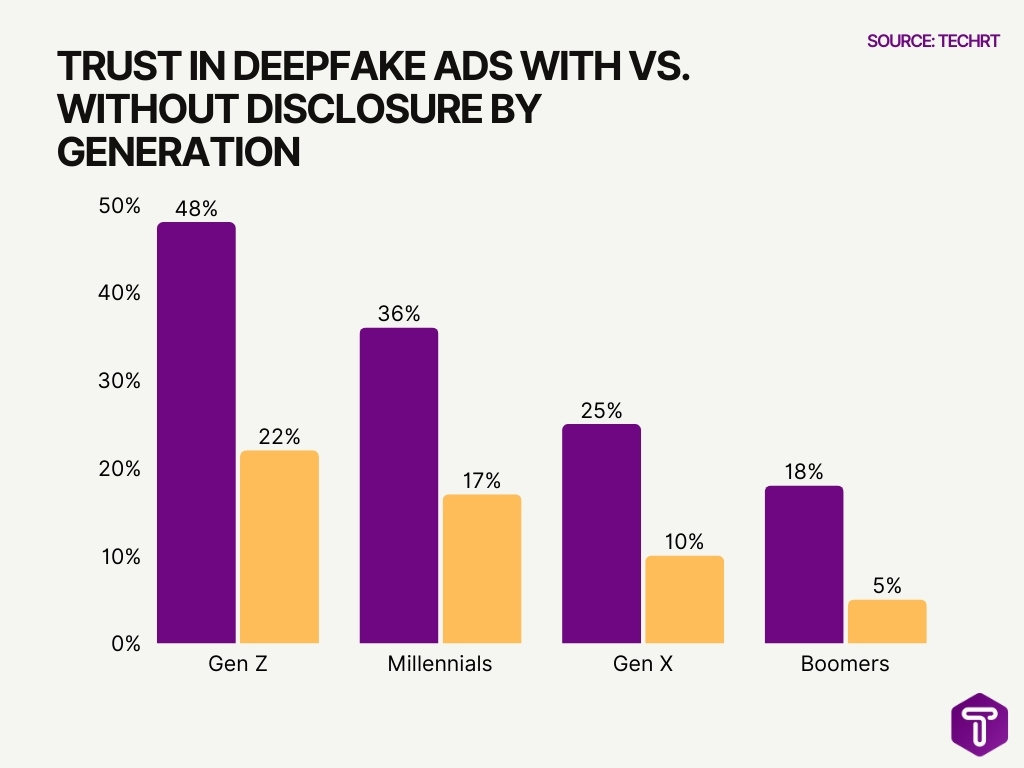

Trust in Deepfake Ads Increases Sharply With Disclosure

- Trust in deepfake ads is significantly higher when disclosure is present across all generations, highlighting transparency as a key trust driver.

- Gen Z shows the highest trust levels, with 48% trusting deepfake ads with disclosure, compared to just 22% without disclosure.

- Among Millennials, trust reaches 36% with disclosure, while it drops to 17% when no disclosure is provided.

- Gen X consumers remain cautious, as only 25% trust disclosed deepfake ads, and trust falls further to 10% without disclosure.

- Boomers exhibit the lowest confidence overall, with just 18% trusting ads with disclosure and a minimal 5% without disclosure.

- Across generations, disclosure roughly doubles trust for Gen Z and Millennials, raises it 2.5x for Gen X, and more than triples it for Boomers, underscoring the importance of ethical labeling of AI-generated content.

- The data indicate a clear generational trust gap, where younger audiences are more receptive to deepfake advertising than older cohorts.

- Lack of disclosure consistently erodes trust, reinforcing that undisclosed deepfake ads pose reputational and credibility risks for brands.
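
Percentage-point gains and relative multiples tell different stories in this survey. A small sketch using only the generational figures listed above; `disclosure_effect` is a hypothetical helper.

```python
# Trust in deepfake ads (% of respondents), from the survey figures above:
# (with disclosure, without disclosure)
TRUST = {
    "Gen Z": (48, 22),
    "Millennials": (36, 17),
    "Gen X": (25, 10),
    "Boomers": (18, 5),
}


def disclosure_effect(with_pct: int, without_pct: int) -> tuple[int, float]:
    """Return (percentage-point lift, relative multiple) from adding disclosure."""
    return with_pct - without_pct, with_pct / without_pct


effects = {gen: disclosure_effect(*pair) for gen, pair in TRUST.items()}

for gen, (lift_pp, multiple) in effects.items():
    print(f"{gen:<12} +{lift_pp} pp  ({multiple:.2f}x)")
```

Boomers show the smallest absolute gain (13 points) but the largest relative one (3.6x), because their baseline without disclosure is the lowest.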

Video Deepfake Stats

- One quarter of 2025 recorded ~2,031 verified deepfake incidents, a 317% increase over earlier 2025 levels.

- Video deepfakes have risen 550% since 2019 as generative tools improve.

- More than 60% of consumers encountered a deepfake video within a year, per surveys.

- Real‑world deepfake datasets show modern fakes are diverse in language and content, complicating detection.

- AI video manipulation tools are increasingly public and easy to use without coding skills.

- Some malicious actors stream or embed live deepfake videos for scams and misinformation.

- Deepfake videos often combine synthetic audio and visuals, making them harder to spot than voice‑only fakes.

- As deepfake production tools evolve, lower‑budget fakes are becoming good enough to fool untrained viewers.

Celebrity Targeting Numbers

- Celebrities were targeted 47 times in Q1 2025, an 81% increase compared with all of 2024.

- Explicit deepfake images of Taylor Swift reached millions of views before they were removed.

- Media personalities and entertainers are frequent targets due to their high recognizability.

- Beyond pornographic misuse, deepfakes of brands and public figures have appeared in fake commercials.

- Targets include actors, musicians, and social influencers whose likenesses can be monetized.

- Video deepfakes of celebrities often propagate across social platforms rapidly before moderation.

- Female public figures disproportionately face non‑consensual deepfake content.

- Lawsuits over unauthorized use of celebrity deepfakes are on the rise.

Political Deepfake Cases

- Politicians faced 56 deepfake attacks in Q1 2025 alone.

- Deepfake incidents totaled 179 in Q1 2025, exceeding all of 2024 by 19%.

- Politicians account for 36% of all deepfakes since 2017, with 143 cases.

- Donald Trump was the target of 25 deepfake incidents, about 17.5% of the 143 politician cases.

- Joe Biden was the target of 20 deepfake incidents, many during elections.

- 78 election deepfakes targeted voters in 2024 globally.

- 38 countries have seen election‑related deepfakes since 2021, affecting 3.8 billion people.

- 82 political deepfakes hit 38 countries in one year, per Recorded Future.

- 76% of politician deepfakes served political purposes.

- 92% of election deepfakes spread via social media.
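
Several figures in this section are stated relative to a baseline that is not given directly. This sketch backs out the implied counts and shares, assuming each percentage was computed against the baseline as written and that the 56 politician attacks are part of the 179 Q1 2025 incidents. The results are derived estimates, not reported figures, and the helper names are hypothetical.

```python
# Derived (not reported) figures implied by the political deepfake statistics above.


def implied_baseline(current: float, pct_increase: float) -> float:
    """Solve current = baseline * (1 + pct_increase / 100) for baseline."""
    return current / (1 + pct_increase / 100)


def share_pct(part: float, whole: float) -> float:
    """Part as a percentage of whole."""
    return part / whole * 100


# 179 incidents in Q1 2025 were 19% more than all of 2024
incidents_2024 = implied_baseline(179, 19)

# 143 politician cases are 36% of all deepfakes since 2017
all_cases_since_2017 = 143 / 0.36

# Shares of the 143 politician cases: Donald Trump (25) and Joe Biden (20)
trump_share = share_pct(25, 143)
biden_share = share_pct(20, 143)

# Politicians' share of the 179 Q1 2025 incidents (56 attacks, assumed included)
politician_share_q1 = share_pct(56, 179)

print(f"Implied 2024 incident total: ~{incidents_2024:.0f}")
print(f"Implied deepfakes since 2017: ~{all_cases_since_2017:.0f}")
print(f"Trump: {trump_share:.1f}% | Biden: {biden_share:.1f}% of politician cases")
print(f"Politicians' share of Q1 2025 incidents: {politician_share_q1:.1f}%")
```

Under these assumptions, 2024 saw about 150 incidents in total, and politicians accounted for roughly 31% of Q1 2025 incidents.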

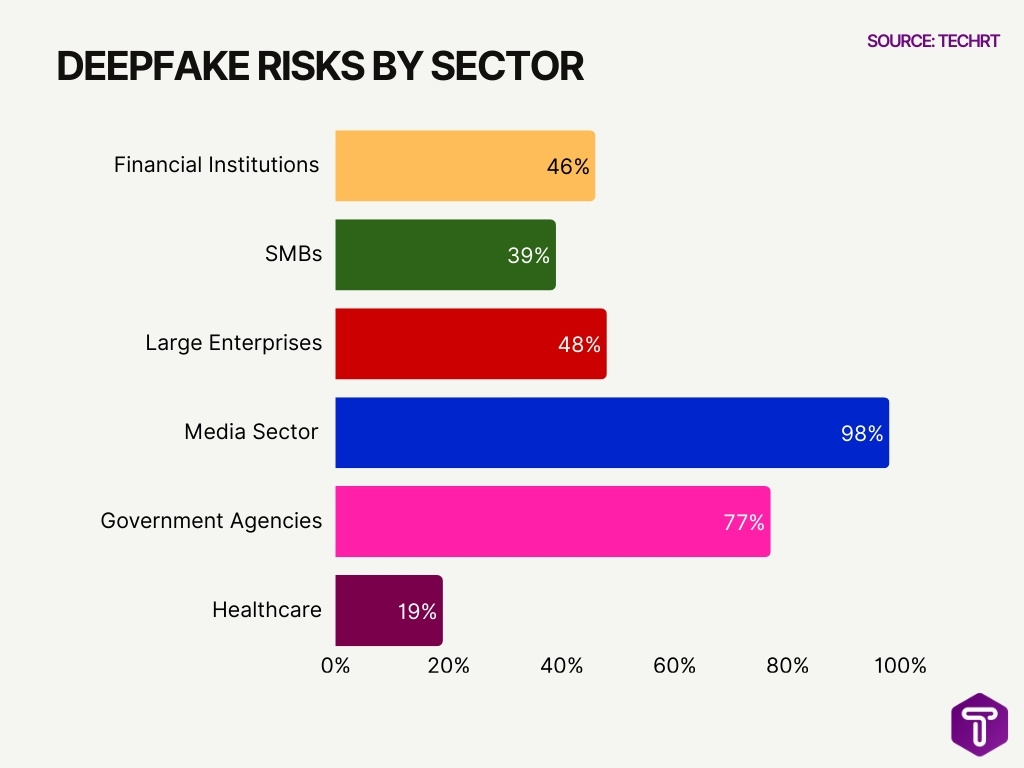

Sector Vulnerability Breakdown

- 46% of financial institutions were affected by deepfake fraud in the past year.

- Contact centers experienced a 1,300% surge in deepfake voice attacks targeting their phone lines.

- 39% of SMBs (251–500 employees) reported audio deepfake incidents, a lower rate than larger firms.

- Larger firms reported video deepfake incidents at a rate of 48%, possibly because more advanced tools help them detect more attacks.

- The media sector views deepfakes as a major reputational risk; an estimated 98% of deepfake videos online are pornographic.

- Ahead of the 2024 elections, 77% of voters reported encountering deepfake content, a direct challenge for government agencies.

- Healthcare accounts for 19% of deepfake targets via fake telehealth impersonations.

- Retailers lost $100 billion to fraud schemes in 2023, including return fraud that used deepfakes.

- Financial services firms report an average loss of $603,000 per deepfake incident.

- Deepfakes now comprise 6.5% of all fraud attacks, up 2,137% since 2022.

Creation Accessibility Facts

- Deepfake creation tools have become widely available, lowering the barrier to entry for both benign and malicious users.

- Researchers have documented ~35,000 publicly downloadable deepfake model variants on public model‑sharing platforms.

- These models have been downloaded nearly 15 million times, indicating widespread interest and access.

- Some deepfakes can be created from as few as 20 images in about 15 minutes on consumer hardware.

- This accessibility has enabled non‑experts to generate professional‑level synthetic media with few resources.

- Easy access increases risks of malicious uses, from scams to reputational manipulation.

- Creation tools now target multiple media types (voice, video, image) through integrated platforms.

- As a result, governments and tech platforms are evaluating requirements for usage transparency and consent tracking.

Organizational Preparedness Levels

- Surveys show ~80% of companies lack a clear strategy to respond to deepfake attacks.

- Adoption of enterprise deepfake detection tech is rising but uneven across sectors.

- In financial services and media, preparedness tends to be higher due to direct fraud exposure.

- Many organizations prioritize employee training on AI threats, but efforts lag behind risk growth.

- Governments are investing in content verification and digital provenance initiatives to support institutional defenses.

- Cybersecurity frameworks increasingly treat deepfakes as a core threat vector.

- Smaller enterprises and nonprofits often lack both budget and expertise for countermeasures.

- Collaboration among firms and across industries is rising to share threat intelligence.

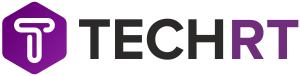

Surge in Identity Fraud Tactics Over the Past Two Years

- Fake or modified physical documents remain the most widespread identity fraud method, increasing from 49% in 2022 to 58% in 2024, highlighting continued reliance on traditional document-based fraud.

- Audio deepfakes have risen sharply, climbing from 37% to 49% over two years, reflecting the growing use of AI-generated voice impersonation in fraud schemes.

- Video deepfakes show the fastest growth, surging from 29% in 2022 to 49% in 2024, underscoring rapid advancements in generative AI–powered identity manipulation.

- Synthetic identity fraud remains persistently high, edging up from 46% to 47%, indicating its continued effectiveness in bypassing verification systems.

- Audio and video deepfakes (49% each in 2024) now exceed synthetic identity fraud (47%) and trail only fake or modified documents (58%), a clear shift toward AI-driven identity fraud tactics.

- Overall, the data reveals that identity fraud is becoming more sophisticated, technology-led, and increasingly difficult to detect, posing heightened risks for organizations and consumers alike.
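
The 2022 and 2024 figures above differ in both percentage points and relative terms. A minimal sketch using only the listed values; `TACTICS` and `changes` are illustrative names.

```python
# Reported prevalence of each identity fraud tactic, % (2022, 2024),
# taken from the figures above.
TACTICS = {
    "Fake or modified physical documents": (49, 58),
    "Audio deepfakes": (37, 49),
    "Video deepfakes": (29, 49),
    "Synthetic identity fraud": (46, 47),
}

# (percentage-point change, relative change in %) for each tactic
changes = {
    name: (new - old, (new - old) / old * 100)
    for name, (old, new) in TACTICS.items()
}

# Rank tactics by absolute (percentage-point) growth, largest first
for name, (pp, rel) in sorted(changes.items(), key=lambda kv: kv[1][0], reverse=True):
    print(f"{name:<38} +{pp:>2} pp  (+{rel:.1f}% relative)")
```

Video deepfakes gain the most on both measures (+20 points, about +69% relative), while synthetic identity fraud is nearly flat (+1 point).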

Consumer Awareness Stats

- Approximately 60% of consumers reported encountering a deepfake video in the past year.

- Despite encounter rates, human accuracy in spotting deepfakes remains low (often under 30% for realistic fakes).

- 71% of students want training on recognizing AI media, but only 38% have received it.

- Only 22% of students feel confident in discerning real vs. AI‑generated content.

- Public surveys show strong worry about victimization, with 3 in 5 people concerned about being targeted by deepfakes.

- Awareness levels vary widely by age and digital literacy.

- Many consumers overestimate their ability to detect altered media, creating a false sense of security.

- Educational campaigns and platform disclosures are expanding to boost public awareness.

Non‑Consensual Content Prevalence

- An estimated 96–98% of deepfakes online are non‑consensual intimate images, making this a major abuse category.

- Non‑consensual content increased by ~1,780% from 2019 to 2024, according to law enforcement data.

- Public concern around these violations remains high, with many fearing victimization.

- Surveys show some portion of the population holds neutral or dismissive views about sharing non‑consensual deepfakes, a troubling social trend.

- Regulatory reforms in several countries have criminalized the possession and exploitation of non‑consensual deepfakes.

- Non‑consensual deepfakes disproportionately target women and marginalized groups.

- Advocacy groups call for stronger platform enforcement and legal penalties to address this abuse.

- Technological tools are emerging to flag and remove non‑consensual content, though challenges remain.

Frequently Asked Questions (FAQs)

How many deepfake files are projected to exist online by 2025?

Around 8 million deepfake files are projected to be online by 2025, up from approximately 500,000 in 2023.

By what percentage did deepfake fraud attempts increase in 2023?

Deepfake fraud attempts surged by 3,000% in 2023 compared with the previous year.

What was the estimated global deepfake AI market size in 2024 and its projected value by 2030?

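Estimates vary by source. One estimate puts the global deepfake AI market at about $572.3 million in 2024, reaching approximately $5.29 billion by 2030 at a ~44.8% CAGR. The forecast in the market growth overview above is higher, at $783.8 million in 2024 and $6,562.5 million in 2030.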

What percentage of consumers have encountered a deepfake video in the last year?

About 60% of consumers reported encountering a deepfake video within the past year.

What share of the global deepfake AI market did North America hold in 2024?

North America held the largest regional share at 34.8% of the global deepfake AI market in 2024.

Conclusion

Deepfakes and AI‑generated media have evolved into a global force reshaping digital trust, fraud risk, and media credibility. From rising market valuations and broad accessibility to disparities in organizational readiness and uneven public awareness, the data underscores the complexity of this phenomenon. Non‑consensual content, especially intimate deepfakes, remains a critical social harm with legal and ethical implications.

While technology and regulation continue to advance, the challenge for businesses, governments, and consumers is clear: adapt quickly and educate broadly to mitigate deepfake harms. The coming years will determine whether society can balance innovation with responsibility in the age of synthetic media.
