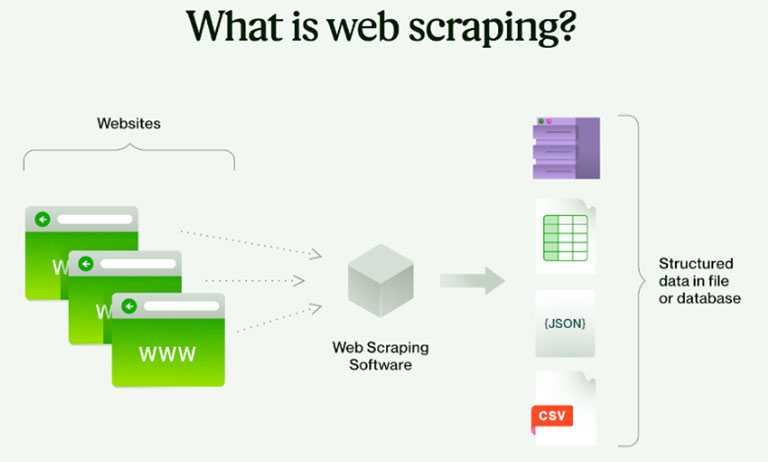

Data is valuable, and web scraping has become a core technique for collecting it online. A scraper, a type of software that automates data extraction from websites, can streamline this process significantly.

Whether you are compiling market research, monitoring competitors’ pricing, or collating news content, a well-configured scraper saves time and surfaces data that would be tedious to gather by hand.

This guide walks you through the fundamental steps of using a scraper, from selecting appropriate tools to handling the challenges you are likely to encounter.

What Are Web Scrapers?

A web scraper is a specialized software tool designed to automate the process of extracting data from websites. It navigates the web, accesses specific pages, and then harvests required information from these sites. This could range from text and images to complex data structures like product listings, user reviews, or even social media posts. Typically, a scraper works by identifying and parsing the HTML or XML code of web pages to extract the relevant content.
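
To make the parsing step concrete, here is a minimal sketch that uses only Python's standard-library `html.parser` module. The sample HTML, the `LinkExtractor` class, and the `extract_links` function are illustrative assumptions rather than part of any particular scraping tool. A real scraper would download the page first and would often use a more forgiving parsing library.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this from a URL.
SAMPLE_HTML = """
<html>
  <head><title>Example Shop</title></head>
  <body>
    <a href="/products/1">Kettle</a>
    <a href="/products/2">Toaster</a>
    <a name="top">Anchor without a link target</a>
  </body>
</html>
"""


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag and the text of the <title> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors that have no href attribute
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_links(html):
    """Return (page title, list of link targets) for an HTML string."""
    parser = LinkExtractor()
    parser.feed(html)
    return parser.title.strip(), parser.links


if __name__ == "__main__":
    print(extract_links(SAMPLE_HTML))
```

The same pattern, reacting to start tags, end tags, and text, underlies most HTML-based extraction, whatever tool you end up using.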

It’s a powerful tool for businesses, researchers, and individuals who need to gather large amounts of online data efficiently. Scrapers are widely used in various applications, such as market research, price comparison, lead generation, and content aggregation. Their ability to quickly and systematically collect online information makes them invaluable in an era where data is a critical asset.

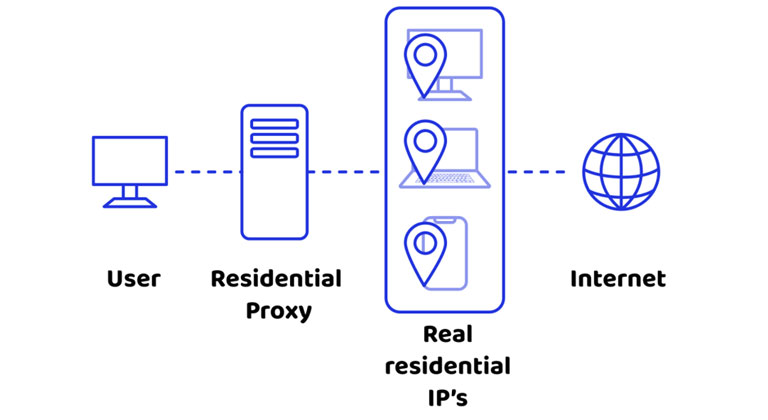

Choosing the Right Tools: The Role of a Residential IP Proxy Service

The first step in effective web scraping is choosing the right tools. This includes both the scraper software and the type of proxy service you use. A residential IP proxy service is particularly useful for scraping. These services route your requests through IP addresses that internet service providers assign to real household connections, so your traffic looks like that of ordinary visitors and is less likely to be blocked by websites.

Residential proxy services typically rotate IP addresses across requests or sessions, which reduces the risk of detection and IP bans. This is especially important when scraping websites with strict anti-scraping measures or when you need to access geo-restricted content.

Setting Up Your Scraper

- Select a Scraper Tool: Choose a scraper tool that fits your technical skill level and the complexity of your scraping needs. Options range from simple browser extensions and visual, no-code tools like Octoparse to programming frameworks like Scrapy.

- Define Your Data Requirements: Clearly define what data you need to scrape. This could be product prices, stock levels, article text, or social media posts. Knowing exactly what you need will help you configure your scraper more effectively.

- Learn the Basics of HTML and CSS: A basic understanding of HTML and CSS lets you identify the specific elements of a webpage, such as tags, classes, and IDs, that contain the data you want to scrape.

Configuring and Running Your Scraper

Configuring and operating a scraper requires a systematic approach to ensure efficient and accurate data extraction. The steps below walk through how to set up and run your scraper effectively.

Input Target URLs

- Gather URLs: Start by collecting the URLs of the websites from which you need to scrape data. This could be a single website or multiple sites, depending on your project.

- Batch Processing: Many advanced scraping tools allow you to input a list of URLs for batch processing, enabling the simultaneous scraping of multiple pages. This feature is particularly useful for large-scale data extraction projects.
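
As a rough illustration of batch processing, the sketch below cleans a list of URLs and then fetches them concurrently with a thread pool from Python's standard library. The function names (`clean_urls`, `fetch`, `scrape_batch`) and the `max_workers` default are assumptions made for this example, and the fetch function is pluggable so the batch logic can be tried out without touching the network.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.parse import urlsplit
from urllib.request import urlopen


def clean_urls(raw_urls):
    """Strip whitespace and drop blanks, non-HTTP(S) entries, and duplicates."""
    seen = set()
    cleaned = []
    for raw in raw_urls:
        url = raw.strip()
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue
        if url not in seen:
            seen.add(url)
            cleaned.append(url)  # original order is preserved
    return cleaned


def fetch(url, timeout=10):
    """Download one page and return its body as text."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def scrape_batch(urls, fetch_page=fetch, max_workers=4):
    """Fetch every URL concurrently.

    Returns a dict mapping each URL to its page body, or to the exception
    that was raised while fetching it, so one failure does not stop the batch.
    """
    def safe_fetch(url):
        try:
            return fetch_page(url)
        except Exception as exc:  # keep the error for later inspection
            return exc

    urls = clean_urls(urls)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(urls, pool.map(safe_fetch, urls)))
```

Keeping `max_workers` small is a deliberate choice here: more parallel requests finish sooner but also put more load on the target site and are more likely to trigger blocks.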

Set Up Data Extraction Rules

- Identify Data Points: Determine the specific pieces of information you need to extract. This could range from text and images to pricing information and user reviews.

- Select HTML Elements: Use the scraper’s selector tool to pinpoint the HTML elements that contain your desired data. This might involve a bit of trial and error to ensure you’re capturing the right content.

- Customize Extraction Patterns: Some scrapers allow you to create custom extraction patterns or use regular expressions (regex) for more complex data structures.
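
The two ideas above, selecting elements and then applying a pattern, can be sketched together in a few lines of Python. This example assumes the prices sit in elements with a `price` class and look like `$1,249.50`. The class name, the `PRICE_PATTERN` regex, and the helper names are all hypothetical and would need adjusting for a real site.

```python
import re
from html.parser import HTMLParser

# Matches a number with an optional two-digit decimal part, e.g. 19 or 1249.50.
PRICE_PATTERN = re.compile(r"(\d+(?:\.\d{2})?)")


class ClassTextExtractor(HTMLParser):
    """Collects the text inside every element whose class list has target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.matches = []
        self._tag = None   # tag name of the element currently being captured
        self._depth = 0    # nesting depth of same-named tags inside it
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self._tag is None:
            classes = (dict(attrs).get("class") or "").split()
            if self.target_class in classes:
                self._tag, self._depth, self._buffer = tag, 1, []
        elif tag == self._tag:
            self._depth += 1

    def handle_endtag(self, tag):
        if self._tag is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:  # the captured element has closed
                self.matches.append("".join(self._buffer).strip())
                self._tag = None

    def handle_data(self, data):
        if self._tag is not None:
            self._buffer.append(data)


def extract_prices(html, css_class="price"):
    """Return the numeric prices found in elements carrying css_class."""
    parser = ClassTextExtractor(css_class)
    parser.feed(html)
    prices = []
    for text in parser.matches:
        found = PRICE_PATTERN.search(text.replace(",", ""))
        if found:  # elements without a number are skipped
            prices.append(float(found.group(1)))
    return prices
```

Selecting the element first and applying the regex only to its text keeps the pattern simple and avoids accidental matches elsewhere on the page.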

Integrate Proxy Settings

- Choose a Proxy Service: Select a residential IP proxy service that offers a pool of IP addresses to mask your scraping activities.

- Configure Proxy Settings: Input the details of your proxy service into the scraper. This typically includes the proxy address, port number, and any required authentication details.

- IP Rotation: Ensure that your proxy service supports IP rotation, which is crucial for avoiding detection and IP bans during scraping sessions.
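
For a script-based scraper, the proxy settings described above might be wired in as follows, using `urllib.request` from Python's standard library. The `proxy_url` helper and `RotatingProxyPool` class are hypothetical names for this sketch, and the hosts, ports, and credentials you pass in would come from your provider. Many residential proxy services rotate IPs on their side behind a single gateway address, in which case a pool with one entry is enough.

```python
import itertools
from urllib.parse import quote
from urllib.request import ProxyHandler, build_opener


def proxy_url(host, port, username=None, password=None):
    """Build a proxy URL such as http://user:pass@host:port."""
    credentials = ""
    if username and password:
        # Percent-encode credentials so characters like "@" do not break the URL.
        credentials = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"http://{credentials}{host}:{port}"


class RotatingProxyPool:
    """Hands out proxies from a fixed list in round-robin order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        self._cycle = itertools.cycle(list(proxy_urls))

    def next_proxy(self):
        """Return the next proxy URL in the rotation."""
        return next(self._cycle)

    def next_opener(self):
        """Return a urllib opener that sends HTTP and HTTPS traffic via the next proxy."""
        proxy = self.next_proxy()
        return build_opener(ProxyHandler({"http": proxy, "https": proxy}))
```

A request would then be made with something like `pool.next_opener().open(url, timeout=10)`, so that each call goes out through the next proxy in the list.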

Run Tests

- Pilot Testing: Conduct a pilot test by running the scraper on a small set of pages. This helps in identifying any issues in data extraction or formatting.

- Analyze Test Results: Carefully review the extracted data for accuracy and completeness. Check if the data is being pulled in the correct format and whether any important information is being missed.

- Adjust Configurations: Based on the test results, fine-tune your scraper settings. This might involve adjusting the selectors, modifying the delay between requests, or tweaking the proxy settings.
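
A pilot test along these lines could look like the sketch below. It runs an extraction function over the first few pages, pauses between them, and counts how often each required field comes back empty. The `pilot_run` name, its parameters, and the report layout are assumptions for illustration, and `extract` stands in for whatever your scraper does with a single page.

```python
import time


def pilot_run(pages, extract, required_fields, sample_size=5,
              delay_seconds=1.0, sleep=time.sleep):
    """Run extract() on the first sample_size pages and report missing fields.

    pages           -- list of inputs for extract (URLs or HTML strings)
    extract         -- function that turns one page into a dict of fields
    required_fields -- field names that every record is expected to contain
    sleep           -- injectable so the delay can be skipped in tests
    """
    sample = pages[:sample_size]
    missing = {field: 0 for field in required_fields}
    records = []
    for index, page in enumerate(sample):
        if index > 0:
            sleep(delay_seconds)  # pause between requests to stay polite
        record = extract(page)
        records.append(record)
        for field in required_fields:
            if record.get(field) in (None, ""):
                missing[field] += 1
    return {"pages_tested": len(sample), "missing": missing, "records": records}
```

If a field is missing on most sampled pages, the selector is the likely culprit. If it is missing on only a few, those pages probably use a different layout and deserve a closer look before the full run.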

Monitor and Troubleshoot

- Continuous Monitoring: Once you start the full-scale scraping operation, continuously monitor the process for any errors or blocks.

- Handle CAPTCHAs and Blocks: Implement strategies to handle CAPTCHAs and website blocks, which may include using CAPTCHA-solving services or lowering your request rate.

- Data Validation: Regularly validate the scraped data for quality and relevance, ensuring that the scraper continues to perform as expected.
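
One common way to adjust request rates when a site starts pushing back is exponential backoff: wait, retry, and double the wait each time. The sketch below assumes that HTTP status codes 403, 429, and 503 signal a block or rate limit, which varies from site to site. The `fetch_with_backoff` name and its defaults are illustrative, and `fetch_page` is any function that raises `urllib.error.HTTPError` on a failed request, as `urllib.request.urlopen` does.

```python
import time
from urllib.error import HTTPError

# Status codes treated as "blocked or rate limited" in this sketch (an assumption).
BLOCK_STATUSES = {403, 429, 503}


def fetch_with_backoff(url, fetch_page, max_attempts=4, base_delay=2.0,
                       sleep=time.sleep):
    """Call fetch_page(url), retrying with growing delays when blocked.

    Waits base_delay, then 2x, then 4x ... between attempts. Errors that do
    not look like a block, and the final failed attempt, are re-raised.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fetch_page(url)
        except HTTPError as error:
            is_last_attempt = attempt == max_attempts - 1
            if error.code not in BLOCK_STATUSES or is_last_attempt:
                raise
            sleep(base_delay * 2 ** attempt)
```

Logging each retry alongside the URL and status code gives you the raw material for the monitoring described above, since a rising retry count is usually the first sign that a site has started blocking you.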

Conclusion

A scraper can be a powerful way to gather data from the internet. By combining the right scraping tool with a reliable residential IP proxy service, you can efficiently collect the data you need while minimizing the risk of detection. Remember to always scrape responsibly, respecting legal and ethical guidelines, and be prepared for the challenges that may arise along the way.
